Data-Driven Modeling

Haptic rendering requires a model of the object or sensation you would like to display. Traditional haptic rendering algorithms use a physics-based model to generate the forces or vibrations displayed to the user as a function of the user's position and/or movement. These traditional models are typically hand-tuned to approximate the desired sensation, but they cannot fully match the richness of the haptic feedback we experience when interacting with the physical world.
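For contrast with the data-driven approach, here is a minimal sketch of the kind of hand-tuned, physics-based model described above: a one-dimensional virtual wall rendered as a spring and damper. The function name, the stiffness `k`, and the damping `b` are illustrative assumptions, not parameters from our work.

```python
def virtual_wall_force(x: float, v: float, wall_x: float = 0.0,
                       k: float = 500.0, b: float = 2.0) -> float:
    """Return the 1-D force (N) a hand-tuned virtual wall applies to a probe.

    x: probe position (m); v: probe velocity (m/s).
    The wall occupies x < wall_x. Positive force pushes the probe out of the
    wall (+x). k (N/m) and b (N*s/m) are hypothetical hand-tuned values.
    """
    penetration = wall_x - x
    if penetration <= 0.0:
        return 0.0  # probe is in free space: no force
    # Spring pushes the probe out; damper opposes the probe's velocity.
    force = k * penetration - b * v
    return max(force, 0.0)  # a wall can push but never pull


if __name__ == "__main__":
    # 1 cm inside the wall, moving further in at 1 m/s.
    print(virtual_wall_force(-0.01, -1.0))
```

Every constant in such a model is picked by hand, which is the limitation the data-driven approach aims to avoid.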

Instead of creating models that generate the touch signals, we take the inverse approach and model the touch signals directly. Neuroscience and perception research tell us which signals are generated when we interact with objects in our environment and how we perceive them. In our research, we therefore take a data-driven approach: we record and model these signals using electromechanical analogues of our touch sensors, such as force sensors and accelerometers. This process of capturing the feel of interesting haptic phenomena has been dubbed “Haptography” (haptic + photography). Like photography in the visual domain, haptography lets us quickly record the haptic feel of a real object so that the sensation can be reproduced later.

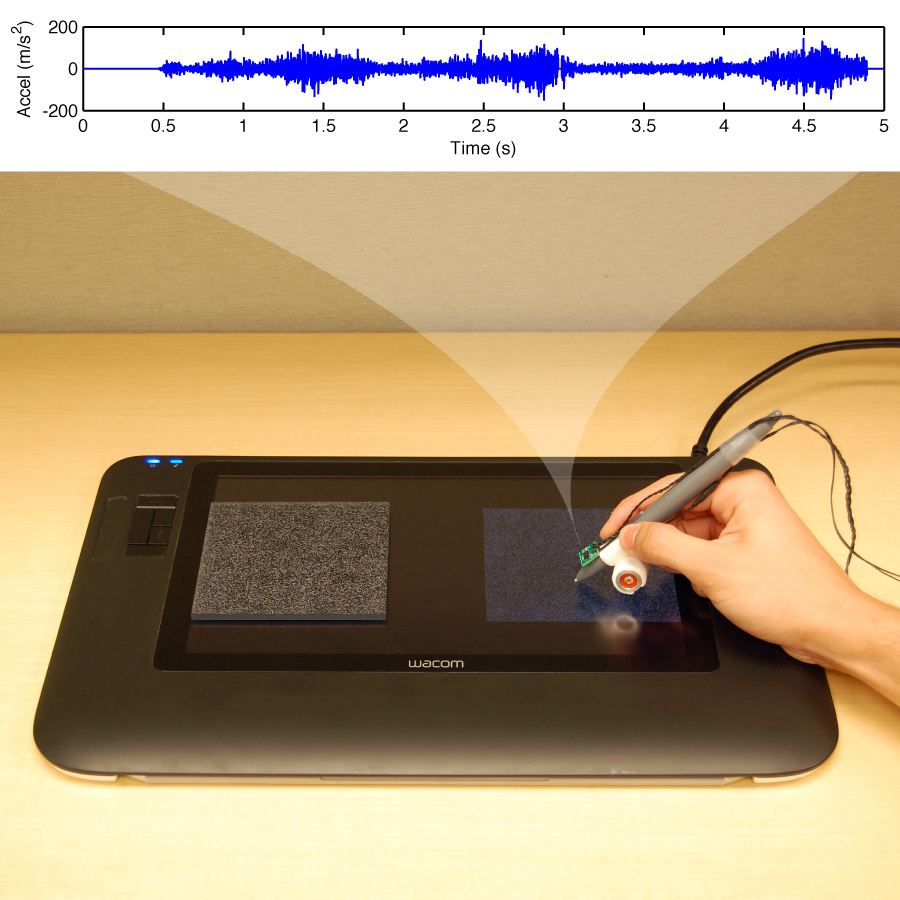

In the past, we have successfully applied this data-driven method to modeling the feel of textured surfaces and recreating that sensation as a user interacts with a smooth tablet screen. We captured the haptic signals with a custom haptic recording tool that measured the forces and high-frequency vibrations felt when interacting with textured objects. The vibrations felt through the tool as it is dragged across an object encode information about the object’s texture and roughness. We created mathematical models of these signals, which were stored and later used to create virtual versions of the objects. For rendering, we attached a voice coil vibration actuator to a stylus, which outputs the texture vibration signals as the user drags the stylus across the tablet surface.
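The text does not say what form the mathematical models take. As a hedged illustration, the sketch below assumes an autoregressive (AR) model, a common choice for texture vibration signals: it fits AR coefficients to an acceleration trace by least squares, then synthesizes a new, statistically similar vibration by passing white noise through the fitted filter. The function names, the model order, and the use of a single fixed model (ignoring how the vibration may change with the user's speed and force) are all assumptions, and the "recording" here is simulated.

```python
import numpy as np


def fit_ar(signal: np.ndarray, order: int) -> tuple[np.ndarray, float]:
    """Least-squares fit of x[n] = sum_k a[k] * x[n-1-k] + e[n].

    Returns the coefficients a (length `order`) and the residual variance.
    """
    x = np.asarray(signal, dtype=float)
    # Each row holds the `order` samples preceding the target, most recent first.
    X = np.array([x[n - order:n][::-1] for n in range(order, len(x))])
    y = x[order:]
    a, *_ = np.linalg.lstsq(X, y, rcond=None)
    residual = y - X @ a
    return a, float(np.var(residual))


def synthesize_ar(a: np.ndarray, noise_var: float, n_samples: int,
                  rng: np.random.Generator) -> np.ndarray:
    """Drive white noise through the all-pole AR filter to make a new signal."""
    order = len(a)
    out = np.zeros(n_samples + order)  # leading zeros act as initial history
    noise = rng.normal(0.0, np.sqrt(noise_var), n_samples)
    for n in range(n_samples):
        past = out[n:n + order][::-1]  # most recent sample first
        out[n + order] = a @ past + noise[n]
    return out[order:]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Simulated "recording": an AR(2) process standing in for measured acceleration.
    recorded = synthesize_ar(np.array([1.5, -0.8]), 1.0, 5000, rng)
    coeffs, var = fit_ar(recorded, order=2)
    virtual = synthesize_ar(coeffs, var, 1000, rng)  # new signal for the actuator
    print(coeffs, var, virtual.shape)
```

Storing only a few coefficients and a noise variance per texture is compact, and because synthesis is driven by fresh noise, the rendered vibration never repeats the recording verbatim.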

Preference-Driven Modeling

Data-driven texture modeling and rendering has pushed the limits of realism in haptics. However, the scarcity of haptic texture databases and the difficulty of interpolating between and extending models prevent data-driven methods from capturing a wide variety of textures and from customizing models to suit specific output hardware or user needs.

Because haptic rendering performance is ultimately evaluated by human perception, which serves as the ‘gold standard’, we propose using people’s perceptual preferences, expressed while they interact with a set of virtual texture candidates, to guide haptic texture modeling. We call this approach “preference-driven” modeling.

Preference-driven texture modeling is a two-stage process: texture generation and interactive texture search. The texture-generation stage uses a generative adversarial network (GAN) to map points in a latent space to texture models. The texture-search stage evolves the texture models generated by the GAN with an evolutionary algorithm (CMA-ES), guided by the user’s preferences. Our study showed that the proposed method can create realistic virtual counterparts of real textures without recording any additional haptic data.
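A minimal sketch of the interactive search loop follows, under several loud assumptions: the trained GAN generator is replaced by a fixed random nonlinear map, the user is simulated by a function that ranks candidates by their distance to a hidden target texture, and CMA-ES is replaced by a simpler (μ/μ, λ) evolution strategy with a fixed step-size decay. Real CMA-ES additionally adapts a full covariance matrix and its step size from the search history. All names and sizes (`LATENT_DIM`, `PARAM_DIM`, `make_generator`, `simulated_ranking`, `interactive_search`) are hypothetical.

```python
import numpy as np

LATENT_DIM = 8  # hypothetical size of the GAN latent space
PARAM_DIM = 4   # hypothetical number of texture-model parameters


def make_generator(rng: np.random.Generator):
    """Stand-in for a trained GAN generator: a fixed random nonlinear map."""
    W = rng.normal(size=(PARAM_DIM, LATENT_DIM)) / np.sqrt(LATENT_DIM)
    return lambda z: np.tanh(W @ z)


def simulated_ranking(candidates, target) -> np.ndarray:
    """Hypothetical user: ranks candidates by closeness to a hidden real texture."""
    errors = [np.linalg.norm(c - target) for c in candidates]
    return np.argsort(errors)  # indices, preferred candidate first


def interactive_search(generator, target, rng, generations=60,
                       pop_size=8, n_parents=3, sigma=0.5, decay=0.95):
    """Simplified (mu/mu, lambda) evolution strategy in the latent space."""
    mean = np.zeros(LATENT_DIM)
    for _ in range(generations):
        # Sample a small population of latent vectors around the current mean.
        latents = mean + sigma * rng.normal(size=(pop_size, LATENT_DIM))
        textures = [generator(z) for z in latents]
        order = simulated_ranking(textures, target)
        # Recombine: move the mean to the average of the preferred latents.
        mean = latents[order[:n_parents]].mean(axis=0)
        sigma *= decay  # narrow the search as the preferences converge
    return generator(mean)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    gen = make_generator(rng)
    target = gen(rng.normal(size=LATENT_DIM))  # the "real" texture to match
    found = interactive_search(gen, target, rng)
    print(np.linalg.norm(found - target))
```

Searching in the latent space rather than directly over texture-model parameters means every candidate shown comes from the generator, so (assuming a well-trained GAN) each one is a plausible texture.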

Multi-Modal Modeling in Tool-Surface Interactions

Humans depend on multisensory perception when interacting with textured surfaces. Vision is easily fooled when judging roughness: a textured pattern printed on a surface can make it look rough, so its true roughness can be determined only by touch. Conversely, touch struggles to determine whether an object is hollow, which is much easier to tell through sound. Neuroscience research has attempted to reduce multimodal perception to a single modality, but experiments have shown that relying on a single modality limits people’s understanding and perception of an object. Creating a multisensory experience in virtual environments is therefore critical but challenging. In particular, auditory feedback has often been neglected in favor of vision and touch, even though it plays a significant role in perception.

We propose a data-driven method for modeling and rendering, in real time, the sounds produced during tool-surface interactions with textured surfaces. We used a statistical learning method to model the tool-texture sounds; it is more robust and faster than other sound-modeling methods and can handle the grainy, bursty sounds produced in tool-surface interactions. A user study showed that adding virtual sound to the tool-surface interaction greatly increased its realism. The study also showed that, when haptic cues are present, virtual sound can improve people’s accuracy in perceiving texture roughness and hardness, whereas the perception of slipperiness depends mainly on touch.