Improving instructions per cycle (IPC) for single-threaded applications while clock frequencies stagnate requires dealing with fundamentally difficult constraints such as branch mispredictions and cache misses. Overcoming these constraints, while difficult, promises substantial increases in IPC, and it has become a necessity for supporting the growing scale of data. Fast processors, TPUs, accelerators, and heterogeneous architectures have made computation fast enough that memory performance is now the bottleneck in many applications. Because these applications are memory bound, the latency of memory accesses must be reduced. Several emerging memory technologies, such as 3D-stacked DRAM and non-volatile memory, attempt to address the memory bottleneck from a hardware perspective, but they involve tradeoffs among bandwidth, power, latency, and cost.

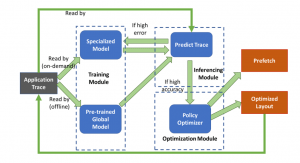

Rather than redesigning existing algorithms to suit a specific memory technology, we propose a Machine Learning (ML) based approach that automatically learns access patterns, which can then be used to prefetch data. A practical ML-based prefetcher must address several challenges: (i) keeping the model small, to enable fast inference and make implementation feasible; (ii) training on large traces to obtain a small model that is highly accurate in predicting memory accesses; (iii) ensuring real-time inference; and (iv) retraining the model online and on demand to learn application-specific models, which requires fast learning from a small amount of data.

We proposed a compressed LSTM that reduces the number of parameters by a factor of O(n/log n) without compromising accuracy, thus addressing the challenges listed above. We also proposed the RNN Augmented Offset Prefetcher (RAOP) framework, which consists of a predictor based on the compressed LSTM and an offset prefetching module. By using the RNN-predicted access as a temporal reference, RAOP improves prefetching performance by executing offset prefetching for both the current address and the RNN-predicted address.
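The idea of issuing offset prefetches around two reference points can be illustrated with a minimal Python sketch. It is not the RAOP implementation: the 64-byte cache line, the fixed offset, the function names, and `toy_predictor` (a stand-in for the compressed-LSTM predictor) are all assumptions made for illustration.

```python
from typing import Callable, List

LINE_SIZE = 64  # bytes per cache line (assumed for this sketch)


def offset_candidates(addr: int, offset: int, degree: int = 1) -> List[int]:
    """Line-aligned addresses that lie k * offset lines past addr, k = 1..degree."""
    line = addr // LINE_SIZE
    return [(line + k * offset) * LINE_SIZE for k in range(1, degree + 1)]


def dual_reference_prefetch(
    current_addr: int,
    predict_next: Callable[[int], int],
    offset: int,
    degree: int = 1,
) -> List[int]:
    """Apply offset prefetching to the current and the predicted address.

    predict_next is a hypothetical hook standing in for a learned predictor.
    """
    predicted_addr = predict_next(current_addr)
    candidates = offset_candidates(current_addr, offset, degree)
    candidates += offset_candidates(predicted_addr, offset, degree)
    # Drop duplicates while keeping issue order.
    seen = set()
    requests = []
    for addr in candidates:
        if addr not in seen:
            seen.add(addr)
            requests.append(addr)
    return requests


def toy_predictor(addr: int) -> int:
    """Stand-in predictor: pretends the next access is one 4 KiB page ahead."""
    return addr + 4096


requests = dual_reference_prefetch(0x1000, toy_predictor, offset=2)
print([hex(a) for a in requests])
```

In this sketch the predicted address only serves as a second anchor for the offset module. Whether the predicted line itself is also prefetched, and how the offset is selected, are design choices not specified here.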

More recently, we proposed a novel clustering approach called Delegated Model Clustering that can reliably cluster applications. For each cluster, we train a compact meta-LSTM model that can quickly adapt to any application in the cluster. We are also studying a Transformer-based memory access prediction framework to achieve higher prediction accuracy and greater IPC improvement.

AREAS OF INTEREST:

Data Prefetching, Memory Access Prediction, Computer Architecture, Time Series Analysis, Sequence Modeling, Meta Learning, Reinforcement Learning

RECENT PUBLICATIONS:

Disclaimer: The following papers may have copyright restrictions. Downloads will have to adhere to these restrictions. They may not be reposted without explicit permission from the copyright holder. Any opinions, findings, and conclusions or recommendations expressed in these materials are those of the author(s) and do not necessarily reflect the views of the sponsors including National Science Foundation (NSF), Defense Advanced Research Projects Agency (DARPA), and any other sponsors listed in the publications.

1. Zhang, Pengmiao; Srivastava, Ajitesh; Brooks, Benjamin; Kannan, Rajgopal; Prasanna, Viktor K., C-MemMAP: clustering-driven compact, adaptable, and generalizable meta-LSTM models for memory access prediction, International Journal of Data Science and Analytics, 2021

2. Zhang, Pengmiao; Srivastava, Ajitesh; Brooks, Benjamin; Kannan, Rajgopal; Prasanna, Viktor K., RAOP: Recurrent Neural Network Augmented Offset Prefetcher, The International Symposium on Memory Systems (MEMSYS), 2020

3. Srivastava, Ajitesh; Zhang, Pengmiao; Wang, Ta-yang; De Rose, Cesar Augusto F; Kannan, Rajgopal; Prasanna, Viktor K., MemMAP: Compact and Generalizable Meta-LSTM Models for Memory Access Prediction, 2020

4. Srivastava, Ajitesh; Lazaris, Angelos; Brooks, Benjamin; Kannan, Rajgopal; Prasanna, Viktor K., Predicting memory accesses: the road to compact ML-driven prefetcher, Proceedings of the International Symposium on Memory Systems – MEMSYS ’19, pp. 461-470, 2019

Click here for the complete list of publications of all Labs under Prof. Viktor K. Prasanna.